- Published on

Scheduling Algorithms in Operating Systems -- An animated demonstration (Part 1)

- Authors

- Name

- Faddal Ibrahim

- @faddaldotdev

Introduction

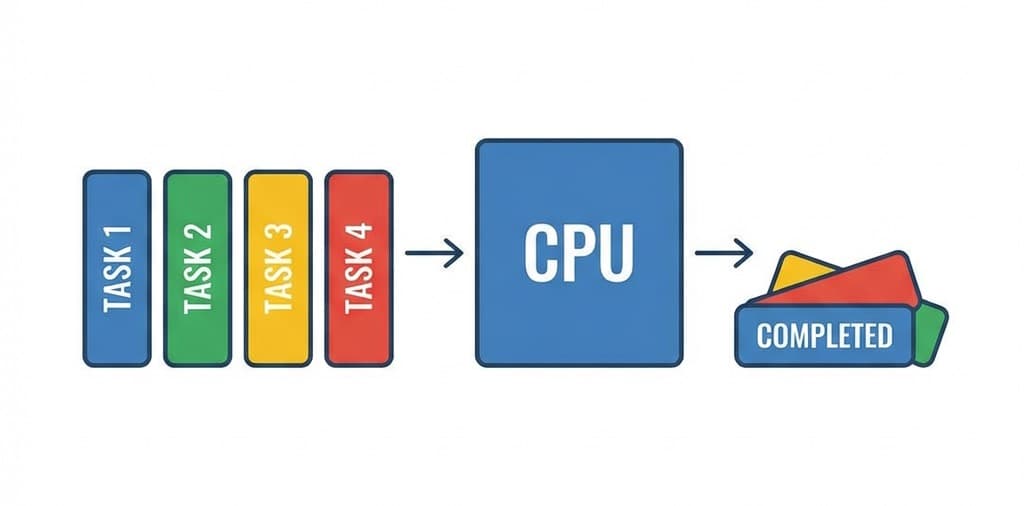

When you are scrolling through the X feed, playing music and typing something on your notes app, to you it might feel like the computer is running all of them at the same time but it's actually not. The trick is through Time Sharing, where each program is run briefly in turns, but this happens so fast that it feels like they are running at the same time.

This illusion is handled by the Operating System (OS)

The hardware components (CPU, RAM etc) are useless until the OS manages them. Like a wand before it's waved by a wizard. In technical terms, we say that the OS virtualizes the hardware. Which is a way to say, it manages the hardware resources and determines what processes (programs) utilize them and in what manner.

If the computer runs all of them at the same time, it will run out of resources and crash. Further more, if done carelessly, it will cause a lot of problems. For example, what if a process runs longer than expected? Or tries to perform restricted operations?

The question then comes, how does the OS decide which process to run first and which to run next? for how long? and when to switch between processes?

Before we answer this, let's explore 2 interesting terms, often confused with each other: Concurrency and Parallelism.

Concurrency vs Parallelism

Imagine 2 tasks that need to be completed in 4 steps each.

With concurrency, the CPU will run Task A for 1 step, then Task B for 1 step, then Task A for another step, then Task B for another step, and so on until the 2 tasks are completed all on a SINGLE CPU Core. The tasks are managed by switching between them until they are completed.

An example is network requests. When an API call is made to an endpoint, it takes a few milliseconds to get a response. During this time, the CPU can run other tasks. This is concurrency. It's useful for when resources are limited, ensuring tasks are managed effectively.

Parallelism is when the CPU can run Task A and Task B at the same time (not in turns) on different CPU cores. This is actual simulataneous execution and requires multiple CPU cores for true parallel execution. This is useful for when resources are abundant, ensuring tasks are executed as fast as possible!

NB: The CPU runs the programs; The OS is the one that manages the CPU and determines which process the CPU should run and when.

So back to our main question, how does the OS decide which process to run next? for how long? when to switch between processes?.

To explore this, we will look at 4 scheduling policies, their trade-offs, and how they work. We will also implement them in TypeScript and visualize them using a simple playground tool I built. (OS Scheduling Algorithms Playground)

Think of these as foundational models for how the OS performs scheduling. Modern CPUs use a hybrid and highly optimized schedulers inspired by these ideas.

First In First Out (FIFO)

This is the most basic scheduling policy. Works exactly as a queue. If 3 jobs A, B, and C with arrival times of 0, 1, and 2 respectively and each running for 10s each, the OS will run Job A first, then Job B, then Job C. Easy!

Typescript Implementation

interface Job {

id: string;

arrivalTime: number;

runningTime: number;

}

const jobs: Job[] = [

{ id: 'B', arrivalTime: 1, runningTime: 10 },

{ id: 'C', arrivalTime: 2, runningTime: 10 },

{ id: 'A', arrivalTime: 0, runningTime: 10 },

];

// sort the jobs based on arrival time ascending.

function fifo(jobs: Job[]) {

return jobs.sort((a, b) => a.arrivalTime - b.arrivalTime);

}

console.log(fifo(jobs));

This works perfectly, but imagine a scenario where Job A takes 100s to run, and Job B takes 1s to run. Job A will take precedence over Job B, even though Job B has a shorter running time. Or worse, Job A takes 1000s to run, and Job B all the way to Job Z take 1s to each run. While we can complete Jobs B to Z in 25s, we will have to wait for Job A's 1000s to finish before we can start them.

Ugh! Of course we dont want this to happen. So what do we do? What if we relax the policy a bit and allow jobs with short run times to run first? Could that be the answer? Let's find out in Part 2.